AWS Fargate Terraform Module

Introduction

Use Terraform to create a VPC and Fargate resources, then use the provided images for a React frontend and Python backend to test different Fargate settings. Once you’re familiar with the project, you can easily use your own images.

The Terraform VPC module is pre-configured to make multiple public and private subnets for Fargate tasks. You can then easily experiment with different networking options like VPC Endpoints and NAT Gateway by changing true or false in variables.tf. You can also change container names, ports, health check settings, Target Group deregistration delay, and more in the same file.

I cover some of these networking modes in more detail in this blog.

My VPC and LoadBalancer modules are required for the networking, but they are already imported from the Terraform Registry in network.tf.

The Terraform modules are pre-configured to work by default, so all you need to give Terraform is the URIs of the images you will build and upload to ECR.

Setup Demo App

React and NGINX Image - (link)

NGINX serves the static React files and is configured to handle client-side routing. nginx.conf also contains a proxy_pass for /api and a resolver set to 169.254.169.253, the Amazon-provided DNS server inside the VPC, so AWS Cloud Map names resolve and services can communicate via names like http://frontend:3000 and http://backend:5000.

FastAPI Backend Image - (link)

A FastAPI app with only a root / route and an /api route, set up to respond to API calls from the frontend.

Test Demo Locally

Clone the frontend project files

git clone git@github.com:ryanef/frontend-ecs-project.git

cd frontend-ecs-project

# if you want to test locally before building images

npm install

npm run dev

When the dev server starts, the CLI should print a link like http://localhost:3000 to open in your browser. If the React app loads, build the image and push it to ECR.

Build Docker Images and Push to Elastic Container Registry

-

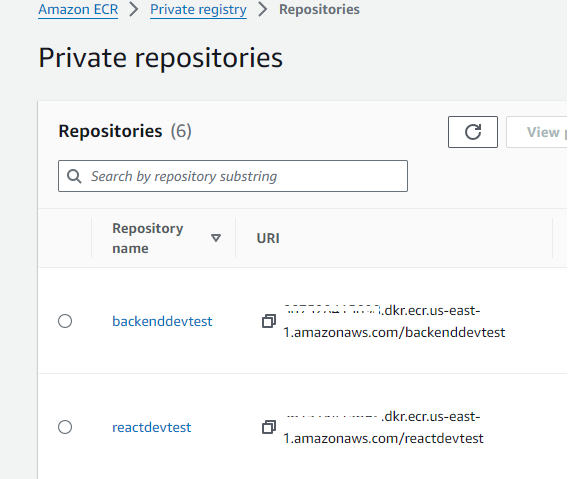

In AWS Console, go to Elastic Container Registry(ECR) and make a repository for the frontend image and one for the backend image. Making a repository in AWS Console only takes a few seconds and they’ll give you exact commands to copy and paste for building, tagging and pushing the images. AWS Docs on creating an ECR repo

-

Use push commands from ECR console or modify the commands below with your REGION and AWS Account Number.

Docker or Docker Desktop must be running and the AWS CLI must be installed. You will need to change REGION (us-east-1, us-west-2, etc.) and ACCOUNTNUMBER on 3 of the 4 lines below:

aws ecr get-login-password --region REGION | docker login --username AWS --password-stdin ACCOUNTNUMBER.dkr.ecr.REGION.amazonaws.com

docker build -t reactdevtest -f Dockerfile.prod .

docker tag reactdevtest:latest ACCOUNTNUMBER.dkr.ecr.REGION.amazonaws.com/reactdevtest:latest

docker push ACCOUNTNUMBER.dkr.ecr.REGION.amazonaws.com/reactdevtest:latest
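Since REGION and ACCOUNTNUMBER repeat across those lines, it can help to set them once and derive the registry address from them. The following is a minimal sketch and not part of the project: the function names and the placeholder account number 123456789012 are made up for illustration, and the script only prints the four commands so you can review them before running anything.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Placeholder values (assumptions): replace with your own region, account number, and repo name.
REGION="${REGION:-us-east-1}"
ACCOUNTNUMBER="${ACCOUNTNUMBER:-123456789012}"
REPO="${REPO:-reactdevtest}"

# Registry host in the form ACCOUNTNUMBER.dkr.ecr.REGION.amazonaws.com
ecr_registry() {
  printf '%s.dkr.ecr.%s.amazonaws.com' "$1" "$2"
}

# Full image URI: registry/repo:tag (tag defaults to "latest")
ecr_image_uri() {
  printf '%s/%s:%s' "$(ecr_registry "$1" "$2")" "$3" "${4:-latest}"
}

REGISTRY="$(ecr_registry "$ACCOUNTNUMBER" "$REGION")"
IMAGE_URI="$(ecr_image_uri "$ACCOUNTNUMBER" "$REGION" "$REPO")"

# Dry run: print the same four commands with the values filled in.
cat <<EOF
aws ecr get-login-password --region ${REGION} | docker login --username AWS --password-stdin ${REGISTRY}
docker build -t ${REPO} -f Dockerfile.prod .
docker tag ${REPO}:latest ${IMAGE_URI}
docker push ${IMAGE_URI}
EOF
```

Run it once per image, for example with REPO=backenddevtest for the backend.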

Clone backend project files

Put these in an entirely different directory than the frontend files or your image size will be huge.

git clone git@github.com:ryanef/backend-ecs-project.git

cd backend-ecs-project

# if you want to test locally

# I recommend making a Python virtual environment

python3 -m venv venv

source venv/bin/activate

pip install -r requirements.txt

uvicorn app.main:app --port 5000

If it works locally, follow the same ECR Push instructions as you did for the frontend. Just be sure to change reactdevtest to backenddevtest or whatever matches your image/repo name in the docker build commands.

IMPORTANT: You will need the URIs for the two ECR repos in the next step.

Create Fargate Infrastructure with Terraform

Clone the Terraform project

git clone http://github.com/ryanef/terraform-aws-fargate

cd terraform-aws-fargate

terraform init

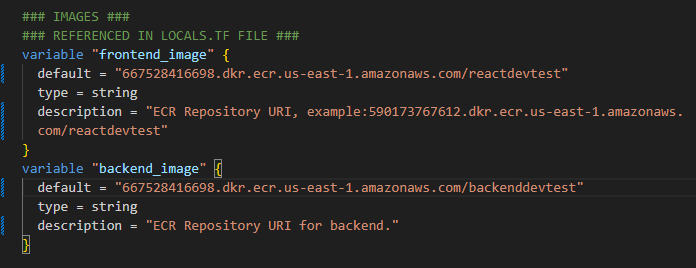

Go to variables.tf and paste the ECR URIs into the variable defaults.

Settings to Change before Terraform Apply

Go to variables.tf and update frontend_image and backend_image with your ECR Repository URIs. If using VPC Endpoints, the ECR Repository must be private. AWS does not support public repositories with ECR’s interface endpoints. I know that sounds backwards but you can read about it in AWS docs.

Enable NAT Gateway or VPC Endpoints by changing the default values of use_endpoints and use_nat_gateway to true. The variables for vpc_name and environment are used to name resources and tag them.
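If you would rather not edit the defaults while experimenting, Terraform's standard -var flag can override them per run. This is a sketch under assumptions: it presumes frontend_image and backend_image are declared as strings and use_endpoints and use_nat_gateway as booleans in variables.tf, and the image URIs shown are placeholders. It prints the command instead of running it.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Placeholder URIs (assumptions): use the URIs of your own ECR repositories.
FRONTEND_IMAGE="${FRONTEND_IMAGE:-123456789012.dkr.ecr.us-east-1.amazonaws.com/reactdevtest:latest}"
BACKEND_IMAGE="${BACKEND_IMAGE:-123456789012.dkr.ecr.us-east-1.amazonaws.com/backenddevtest:latest}"
USE_NAT_GATEWAY="${USE_NAT_GATEWAY:-true}"
USE_ENDPOINTS="${USE_ENDPOINTS:-false}"

# Each -var overrides the default of the same-named variable in variables.tf.
TF_ARGS=(
  -var "frontend_image=${FRONTEND_IMAGE}"
  -var "backend_image=${BACKEND_IMAGE}"
  -var "use_nat_gateway=${USE_NAT_GATEWAY}"
  -var "use_endpoints=${USE_ENDPOINTS}"
)

# Dry run: remove "echo" to actually run the plan.
echo terraform plan "${TF_ARGS[@]}"
```

Keeping the values in an array means the same arguments can be reused for terraform apply, so plan and apply always see the same settings.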

Make sure Terraform can authenticate to AWS. If you’re in an environment where you’ve already set up AWS access, you shouldn’t need any extra steps. Don’t hard-code your AWS access keys anywhere; instead, go to providers.tf and add shared_credentials_files (shared_credentials_file in AWS provider versions before 4.0) or profile, both of which are described in Terraform’s AWS Provider docs.
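Another option that avoids touching providers.tf is the AWS_PROFILE environment variable, which both the AWS CLI and the Terraform AWS provider read when no profile is set explicitly. A small sketch, where my-terraform-profile is a hypothetical profile name:

```shell
#!/usr/bin/env bash

# "my-terraform-profile" is a made-up name; use a profile from your own ~/.aws/config.
export AWS_PROFILE="${AWS_PROFILE:-my-terraform-profile}"
echo "AWS CLI and Terraform will use profile: ${AWS_PROFILE}"

# To confirm the profile resolves to the account you expect before applying:
#   aws sts get-caller-identity
```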

Terraform Apply

After adjusting settings and adding your ECR image URIs you’re ready to apply. It’ll take a few minutes since it is creating about 50 resources.

terraform plan

terraform apply

When it is done, it’ll output the DNS address of the load balancer, which you can visit in your browser. You may get a 503 error for the first minute or so while the tasks finish launching. After the web application loads, click “Profile” in the navigation menu and see if you get a message from the Python backend. If you see “error in profile” displayed on the page, the backend container may need a few more seconds to start up.
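Rather than refreshing the browser, you can poll the address until the 503 goes away. This is a minimal sketch that assumes curl is installed; the function names are made up for illustration and ALB_DNS is a placeholder for whatever address Terraform printed.

```shell
#!/usr/bin/env bash

# Succeeds when the HTTP status code is 2xx or 3xx, i.e. the app is being served.
is_ready() {
  case "$1" in
    2??|3??) return 0 ;;
    *) return 1 ;;
  esac
}

# Polls a URL until is_ready succeeds or the attempts run out.
# Arguments: URL, max attempts (default 30), seconds between attempts (default 10).
wait_for_alb() {
  local url="$1" attempts="${2:-30}" delay="${3:-10}" code i
  for ((i = 1; i <= attempts; i++)); do
    # curl reports 000 as the status code when it cannot connect at all.
    code="$(curl -s -o /dev/null -w '%{http_code}' "$url" || true)"
    if is_ready "$code"; then
      echo "ready after ${i} attempt(s): HTTP ${code}"
      return 0
    fi
    echo "attempt ${i}: HTTP ${code}, retrying in ${delay}s"
    sleep "$delay"
  done
  echo "gave up after ${attempts} attempts" >&2
  return 1
}

# Example (ALB_DNS is a placeholder for the address Terraform printed):
#   wait_for_alb "http://${ALB_DNS}"
```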

Monitoring

VPC Flow Logs, the ECS Service, and ECS Tasks all send logs to CloudWatch Logs, where they can be found under names like vpcName-environment-*. The Application Load Balancer does not have logging enabled; turning it on requires creating an S3 bucket. X-Ray will be optional in a future version.
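To find those logs from the terminal, the AWS CLI can filter log groups by prefix. The sketch below assumes the names are CloudWatch log group names built by joining vpc_name and environment with hyphens, which is inferred from the vpcName-environment-* pattern rather than confirmed; myvpc and dev are placeholder values. It prints the commands instead of running them, and aws logs tail requires AWS CLI v2.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Builds the "vpcName-environment-" prefix from the two naming variables.
log_group_prefix() {
  printf '%s-%s-' "$1" "$2"
}

# Placeholder values (assumptions): use what you set for vpc_name and environment.
VPC_NAME="${VPC_NAME:-myvpc}"
ENVIRONMENT="${ENVIRONMENT:-dev}"
PREFIX="$(log_group_prefix "$VPC_NAME" "$ENVIRONMENT")"

# Dry run: print the AWS CLI commands for listing and following the logs.
echo "aws logs describe-log-groups --log-group-name-prefix ${PREFIX} --query 'logGroups[].logGroupName' --output text"
echo "aws logs tail <log-group-name> --follow"
```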

Change Networking Details

If you want to change the default VPC CIDR or add more subnets, go to variables.tf and you’ll see a networking section at the bottom. Refer to the README.MD for a full list of possible subnets that can be used with the default 10.10.0.0/20 CIDR.
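As a worked example of how much room the default CIDR gives you, the sketch below lists the /24 blocks that fit inside 10.10.0.0/20. The /24 size is an assumption chosen for illustration; the README has the authoritative list, and the module may use other subnet sizes.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Lists the /24 subnets inside 10.10.0.0/20.
# A /20 leaves 24 - 20 = 4 bits for /24 subnets, so there are 2^4 = 16 of them,
# covering 10.10.0.0 through 10.10.15.255.
list_slash24_subnets() {
  local count=$(( 1 << (24 - 20) )) i
  for ((i = 0; i < count; i++)); do
    echo "10.10.${i}.0/24"
  done
}

list_slash24_subnets
```

Any subnet you add has to fall inside that range and must not overlap one that is already in use.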

Add your own ECS Services / Task Definitions

Go to locals.tf and you’ll see a locals block for the services and the default frontend and backend. To add more, simply add new blocks and change the values to your image link, container name, container port, etc. There’s also a locals block for target_groups and you may need to change the port numbers to match your container ports.

Optimization Settings

Application Load Balancer

Most of these can be changed in the locals.tf file where the ECS Services and Target Group settings are. The file name is beside the variable names. You may want different settings for different services so keeping them flexible in these locals blocks seems best for now.

healthy_threshold - locals.tf

HealthyThresholdCount: Number of consecutive passing health checks before a target is considered healthy. This is an ALB setting, but ECS also takes it into account when judging container health.

Default: 5

Range: 2-10

unhealthy_threshold - locals.tf

UnhealthyThresholdCount: Number of consecutive failed health checks before a target is marked unhealthy.

Default: 2

Range: 2-10

interval - locals.tf

HealthCheckIntervalSeconds: The time in seconds between each attempt at a health check.

Default: 30 seconds for ip and instance targets

Range: 5-300 seconds

timeout - locals.tf

HealthCheckTimeoutSeconds: Time in seconds to wait for a response from a target before the health check counts as failed.

Default: 5 seconds for ip or instance targets

Range: 2-120 seconds

deregistration_delay - locals.tf

AWS Default: 300 seconds, Script Default: 60 seconds

Range: 0-3600 seconds

Running containers are “registered” with the Application Load Balancer’s target groups, which track the IP address and health of each target. A target is usually deregistered because you stopped it on purpose or because it errored and crashed. The ALB stops sending new traffic to that target, but because HTTP connections can be kept alive, it leaves existing connections open for a period of time so users with in-flight requests don’t get suddenly interrupted. ECS waits for the deregistration_delay to elapse before it forces the container process to terminate. Most people can lower this significantly unless they have processes or users doing things like large file uploads or other long-lived streaming connections.
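To see how these settings combine, the arithmetic below estimates how long a deployment spends waiting on them. It is a rough sketch, not an exact model: it multiplies threshold by interval and ignores when the first check happens and how long each check takes. The function name is made up for illustration.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Defaults listed above; deregistration_delay uses this project's default of 60 seconds.
HEALTHY_THRESHOLD=5
UNHEALTHY_THRESHOLD=2
INTERVAL=30
DEREGISTRATION_DELAY=60

# Approximate seconds of consecutive checks needed to change a target's state.
seconds_to_state_change() {
  echo $(( $1 * $2 ))
}

echo "new target healthy after about $(seconds_to_state_change "$HEALTHY_THRESHOLD" "$INTERVAL")s"
echo "failing target unhealthy after about $(seconds_to_state_change "$UNHEALTHY_THRESHOLD" "$INTERVAL")s"
echo "draining target kept for up to ${DEREGISTRATION_DELAY}s"
```

With the defaults, a new task needs roughly 150 seconds of passing checks before it receives traffic. Dropping interval to 10 and healthy_threshold to 2 brings the same estimate down to about 20 seconds, which is why these two settings are usually the first ones to tune for faster deployments.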